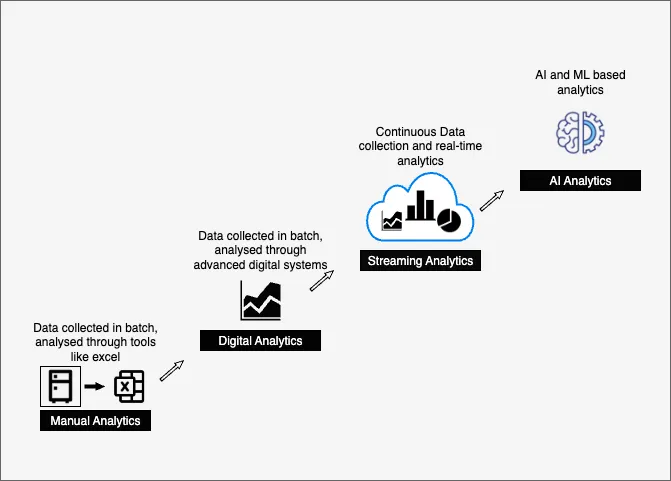

Evolution of Industrial Data Analytics

Over the last two decades, manufacturing industry has witnessed significant digital transformations in the way the data is collected, processed, and utilized for decision-making.

Phase 1: Manual Analytics

In the early stages, the data collection was manual, where there was no dedicated software to automatically collect, process, and store the data. Tools like Excel and other file systems were used for recording the data, do processing and generating analytics. At that time, decision-making used to take months because preparing and presenting data to stakeholders was a time-consuming process.

Phase 2: Digital Analytics

The revolution came with industry-specific digital software that is developed for presenting analytics, data collected in batches and entered into the software, and reports are automatically generated in a presentable form. So this reduces the time to make decisions because generating analytics through data is done beforehand. Hence, decision-making is simplified and advanced, but still, it was reactive, not preventive, because data is coming in batches.

Phase 3: Streaming Analytics & OT-IT Convergence

The introduction of real-time streaming data enabled Operational Technology (OT) for streaming live data on-the-fly, automated decision-making, and further notification to stakeholders. It changed the OT middleware heavily from a legacy monolithic system to a real-time event-driven system. Also, it bridges the OT integration with IT by integrating the sensor telemetry data, asset environmental info, ERP, MES, and CRM systems.

Phase 4: AI/ML Integration

Real-time streaming of data enabled the industry with cloud analytics and AI/ML-based decision-making. To achieve this event-driven system at a high scale, technologies like Apache Kafka, Apache Spark, and Apache Flink played a crucial role. These are proven and well-known tech stacks in distributed computing, in which Kafka works as a distributed message broker, while Spark and Flink provide data transformation capabilities.

Unified Data Model

The traditional systems first collect data from sources, do local processing, and persist that information in databases. Data gets transferred in batches to other cloud systems and IT systems manually or through an automated process. Most of the time, it repeats the same processing in multiple systems and leads to inefficiencies, including:

- Delayed Insights — Data received by other systems is too late to react; sometimes, that is just relevant for future learning only, and no immediate actions can be taken

- Process Redundancy — Same process runs at multiple places, processes the same data multiple times.

- Data Redundancy — Data is kept by multiple applications, hence duplicated storage, increased complexity, and cost

- Disconnected AI/ML — completely decoupled, and works as a passive entity.

The Unified Data Model (Data Unification) addresses the gap between OT and IT; Kafka, along with Apache Flink and Spark systems, enables the applications to consume and produce data in real-time to a shared data layer.

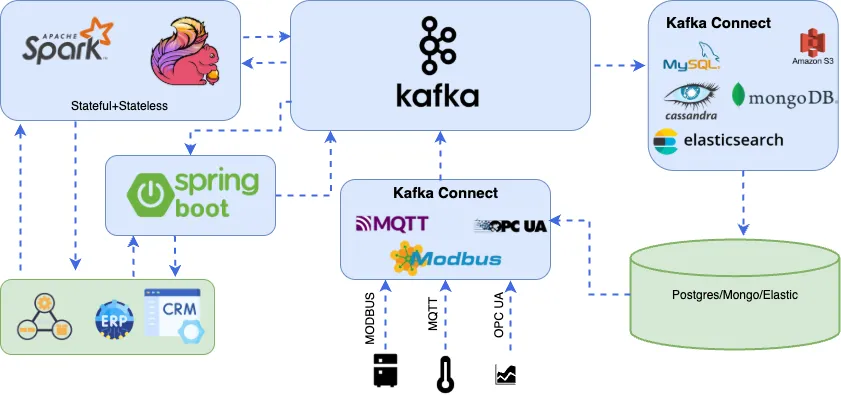

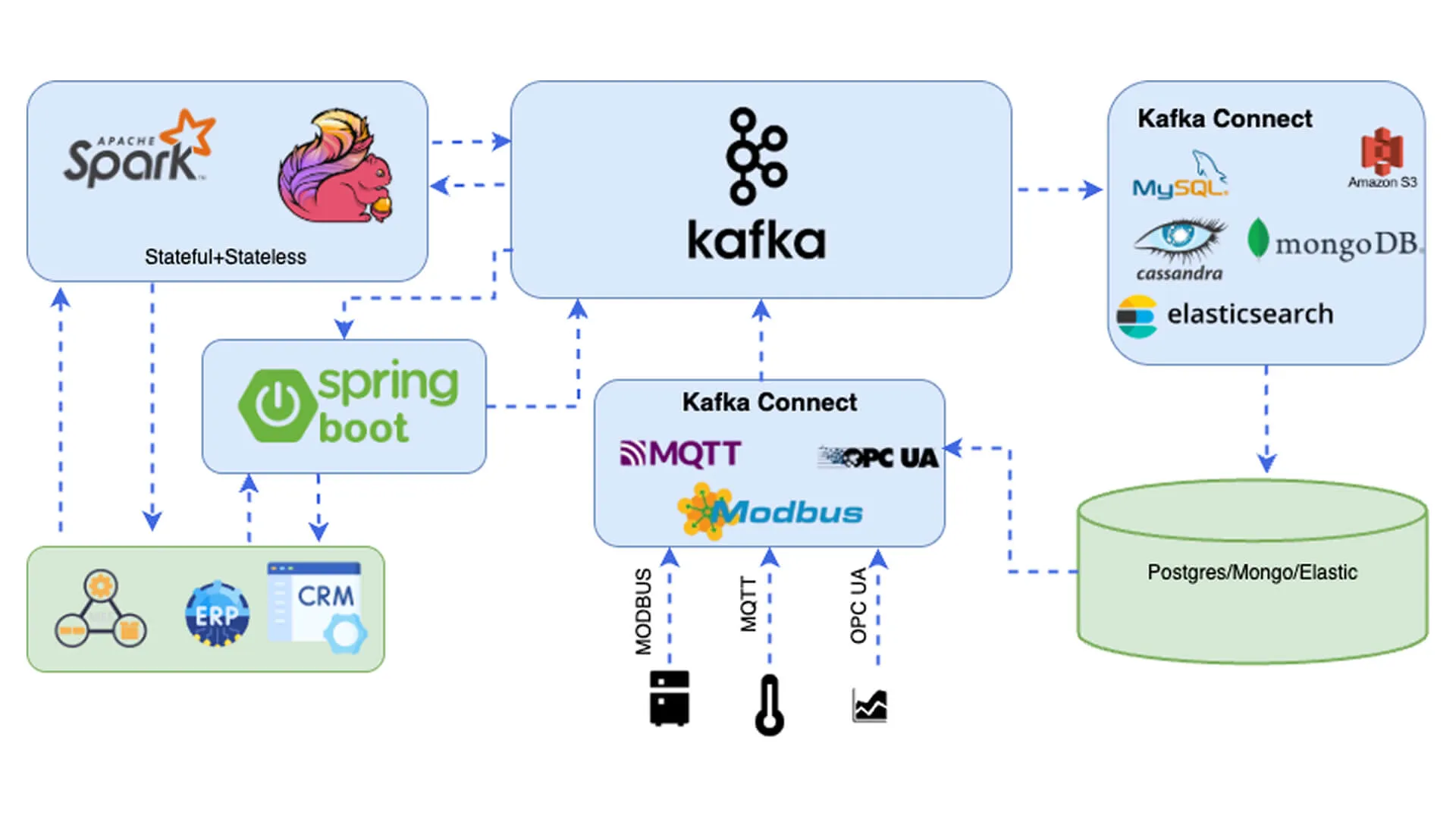

Core components of the architecture

Kafka Connect (Source) — Kafka source connectors solve major problems through data transformation from a wide range of protocols and formats. It provides a common interface for receiving data from external systems and sending it to Kafka.

Kafka — A distributed streaming data platform, applications produce and consume data on a particular channel called “Topic“. It is distributed in nature and is an enabler for parallel processing.

Processing (ETL/ELT) — Multiple compute applications receive data from Kafka, do the ETL and ELT processing, and publish results back to Kafka. The well-known real-time processing systems are K-streams (Spring Boot), spark’s continuous streaming, and Apache Flink.

Kafka Connect (Sink) — Kafka Sink Connectors receive data from Kafka and persist it into various destinations. It supports a wide range of RDBMS, NoSQL, cloud storage, and Message Queues.

Benefits of Unified Architecture

- Eliminate redundant processing — Raw data gets processed once, and it’s consumed by all other applications

- Clear responsibility segregation — Big processes are broken into small dedicated pieces; Highly Cohesive and Loosely Coupled

- Real-time data processing — Streaming jobs process data at real-time to near real-time, which enables immediate decision-making and stakeholder awareness

- Scalable architecture — Small and dedicated functions that can be scaled easily on the basis of behavior, size, and frequency.

- Reduced Cost — The Outcome of one action can be utilized by multiple applications, i.e., if raw data is transformed once, that is available on a topic and can be consumed by other applications

- Continuous processing and persistence

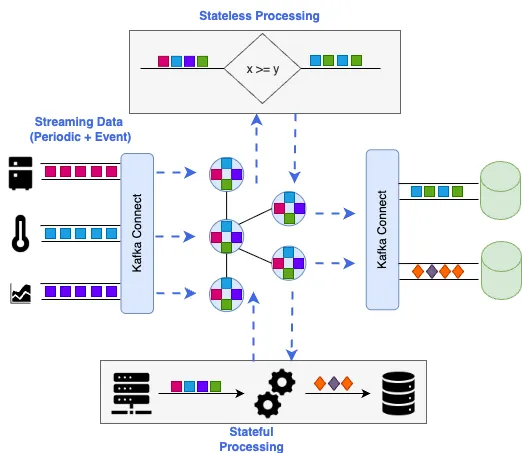

Stream Processing — Stateless vs Stateful

Stateless Processing

Data streamed from the source is directly used for decision-making, projection, and transformation. This is straightforward, fast, and real-time, as it treats each event independently and applies directly to raw data without joining to any environment data or other data models.

Characteristics

- Ideal for simple filtering, threshold-based decision-making, and straightforward ETLs.

- Very low latency, as it is independent of any system state and data model.

- Horizontal Scaling is easy, as events are independent.

- Decisions must be made on the current events; no past events are available.

Stateful Processing

The raw telemetry data is considered meaningless until it is attached to an environment. For example, a temperature reading from the THD sensor is not ready for decision-making until the system is aware of the environment in which it is installed.

Characteristics

- Supports more complex logics that need joins and aggregations, and supports window-based operations.

- Supports decision-making that is dependent on previous states and environmental data

- Horizontal scaling is complex, may require consistency, and a coordination mechanism

- Requires a mechanism to access state and maintain it for a distributed system

- Complex to manage in a distributed system, it requires expertise to scale and maintain an accurate state.

Streaming Pattern: Periodic vs Event-Based

Periodic Stream

- Data is published at a fixed interval (e.g., every 5 seconds)

- Each transmission of data keeps the current state of the equipment, usually including all monitoring parameters.

- Even if there is no change in state, it will transmit the packet.

- Useful for trend analysis and continuous condition monitoring, enables decision-making based on historical trends

- Enables asset remote monitoring systems to take proactive actions on the basis of trend analysis

- Enable Agentic-AI, ML models and predictive maintenance algorithms to learn from historical data.

Event-based Stream

- No fixed interval, Data is transmitted immediately when a specific event or condition is triggered

- Each transmission contains specific information related to event

- Transmits only the impacted parameters

- Useful for alerting and tracking alarming incidents.

- Enables monitoring systems to take reactive actions immediately

- Enables AI agents to take appropriate action.

The Joint Middleware: Bridging OT and IT

In the traditional approach, data was generated by assets, processed in batches, and then made available to other applications.

In the new architecture, the Joint Data Middleware is introduced as a common layer kept available for all the systems, and it reduces the gaps between OT and IT systems by unifying the data transformation layer while maintaining the clear separation of concerns between the two domains.

As soon as the new data is generated from the device, it is streamed upward to be utilized by the other applications. Here, Kafka-like distributed message brokers work as a nervous system for the applications and make data available among various applications, which includes:

- Decision-making based on rules — Automated rule-based engines acting on the thresholds immediately for Periodic and Event-based streams

- Real-time AI/ML processing — Enables real-time anomaly predictions, classifications, and fault detections. Also, enables Agentic AI to take reactive and proactive actions.

- Data Projection and Transformation — Raw data is transformed and utilized by all the applications for visualization and analytics.

- IT System integration — Seamless integration with enterprise systems like ERP, CRM, and other data platforms.

- Asset Real-time monitoring — Enables continuous observation and asset health monitoring.

Conclusion

The move from manual, isolated systems to a real-time, unified data model has revolutionized manufacturing industry operations by providing real-time decision-making. It reduces the duplicated effort and enables intelligent system development. By leveraging Event-driven architectures and smart processing engines, the industrial ecosystems have not only improved scalability and performance but also strengthened the foundation and made it future-ready.

In the next article, we’ll will be talking about the Agentic AI in Manufacturing: Predictive Maintenance & Operations—the industry-agnostic data intelligence layer turning real-time insights into real-world actions.

We’ll explore how Agentic AI takes the unified data streams we've built and transforms them into predictive capabilities: anticipating equipment failures before they happen, optimizing maintenance schedules without downtime bottlenecks, and automating operational workflows with AI that previously required midnight manual runs.

Imagine your industrial equipment / operations running like a well-rehearsed orchestra—each sensor, machine, and process seamlessly coordinated by intelligent agents that learn, adapt, and improve with time. This isn’t just about efficiency; it's about giving your teams peace of mind, better sleep, and fewer “emergency” notifications.

Because building a unified data architecture is just the beginning. Leveraging it with Agentic AI? Scaling it as your business expands requires a whole different game-plan—converting devices/data-sets from informative to a transformative powerhouse.

.jpg)

%20(1).jpg)

.jpg)